Introduction

To have a beautiful character on a screen, two important elements are needed: a smooth geometry and a Texture. A Texture is the image that clothes the character and gives it a personality.

A texture is an image that is transferred to the GPU and is applied to the character during the final stages of the rendering pipeline. Texture Parameters and Texture Coordinates ensure that the image fits the character’s geometry correctly.

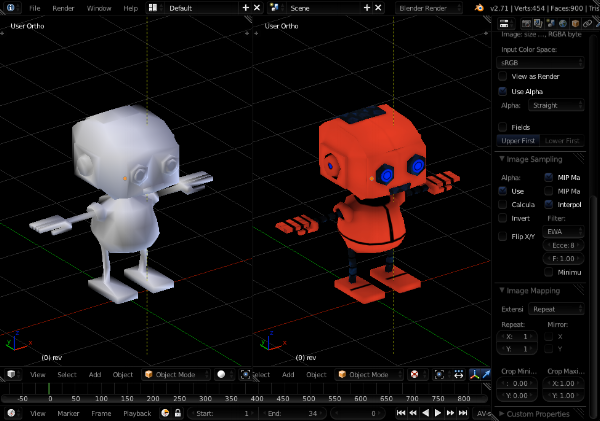

Figure 1. A character with texture applied.

Providing the texture

Nearly every character in a mobile game has a texture. Applying a texture is the equivalent of painting. Whereas you would paint a drawing section by section, a texture is applied to a character fragment by fragment in the Fragment Shader.

So, who provides the texture? Let’s revisit the made-up game studio composed of a graphics designer (Bill) and a programmer (Tom). Their daily routine may look something like this:

Bill starts his day by opening up Blender, a 3D modeling application he uses to model characters. His task is to model a shiny robot-like character with black gloves, a silver helmet, and a white torso. After two hours, he has completed the geometry of the robot. His next task is to do what is known as Unwrapping.

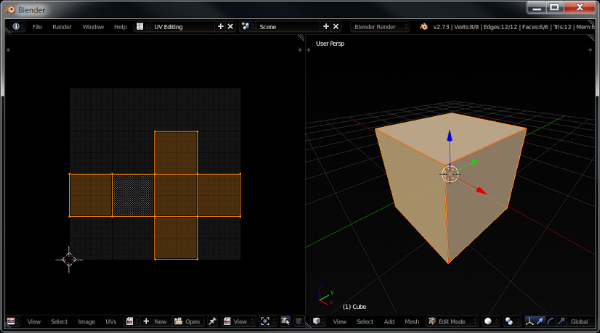

Unwrapping means cutting the 3D character along seams and flattening it into a 2D equivalent of the character. The result is called a UV Map. Why does he do this? Because an image is a two-dimensional entity. By unwrapping the 3D character into a 2D entity, an image can be applied to the character. Figure 2 shows an example of an unwrapped cube.

Figure 2. Unwrapping a cube into its UV Map.

The unwrapping of the character is essentially a transformation from a 3D coordinate system to a 2D coordinate system called the UV Coordinate System. This process is referred to as UV Mapping.

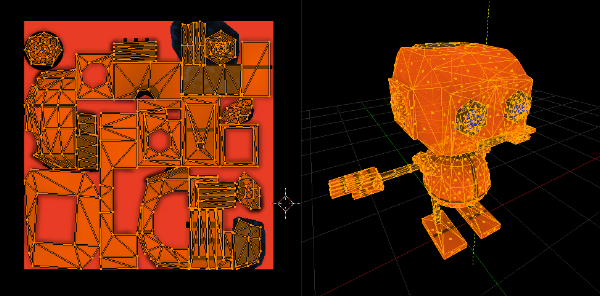

Figure 3. Unwrapping a 3D model into its UV Map.

Let’s continue reading Bill’s workflow:

Once Bill has unwrapped the character, he exports the UV Map to an image editor, such as Photoshop or GIMP. The UV Map serves as a road map to paint over. Here, Bill paints the regions that represent the robot’s gloves black, the helmet silver, and the torso white. At the end, he has a 2D image that will be applied to the 3D character. This image is called a Texture.

It is this texture image that will be loaded into your application and transferred to the GPU.

Figure 4. Character's UV map with texture (image).

A new coordinate system

During the unwrapping process, the Vertices defining the 3D geometry are mapped onto a UV Coordinate System. A UV Coordinate System is a two-dimensional coordinate system whose axes, U and V, range from 0 to 1.

For example, Figure 2 above shows the UV Map of a cube. The vertices of the cube were mapped onto the UV Coordinate System. A vertex located at (1,1,1) in 3D space may have been mapped to the UV coordinates (0.5,0.5).
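In code, the result of unwrapping is typically stored next to the geometry: each vertex carries both its 3D position and the UV coordinate it received on the UV Map. The sketch below is a minimal C layout under that assumption; the struct name `TexturedVertex`, the face data, and the helper `uvs_in_unit_square` are hypothetical, with the vertex at (1,1,1) mapped to (0.5,0.5) to mirror the example above.

```c
#include <assert.h>
#include <stddef.h>

/* One vertex: a position in 3D model space plus the UV coordinate
   it was assigned during unwrapping. Both U and V lie in [0, 1]. */
typedef struct {
    float x, y, z;   /* position in 3D space */
    float u, v;      /* position on the UV Map */
} TexturedVertex;

/* Hypothetical data for the front face of a cube whose UV island
   occupies the square from (0.25, 0.25) to (0.5, 0.5) on the UV Map. */
static const TexturedVertex cube_front_face[4] = {
    { -1.0f, -1.0f, 1.0f,  0.25f, 0.25f },
    {  1.0f, -1.0f, 1.0f,  0.50f, 0.25f },
    {  1.0f,  1.0f, 1.0f,  0.50f, 0.50f },  /* (1,1,1) -> (0.5, 0.5) */
    { -1.0f,  1.0f, 1.0f,  0.25f, 0.50f },
};

/* Returns 1 if every UV coordinate in the array lies inside [0, 1]. */
static int uvs_in_unit_square(const TexturedVertex *verts, size_t count) {
    assert(verts != NULL);
    for (size_t i = 0; i < count; ++i) {
        if (verts[i].u < 0.0f || verts[i].u > 1.0f ||
            verts[i].v < 0.0f || verts[i].v > 1.0f)
            return 0;
    }
    return 1;
}
```

Interleaving position and UV in one struct is only one option; the two could just as well live in separate arrays.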

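During the application of the texture, the UV coordinates of each vertex are interpolated across the surface of every triangle, and the Fragment Shader uses these interpolated coordinates as guide-points to properly attach the texture to the 3D model.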
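Conceptually, each lookup turns a UV coordinate into a position in the texture image. The C sketch below performs a nearest-neighbor lookup on the CPU, assuming a raw RGBA image stored as 4 bytes per texel with row 0 at V = 0; `sample_nearest` and `clamp01` are hypothetical names. On the device this work is done by the GPU when the shader samples the texture (for example with `texture2D` in GLSL ES 1.00), not by application code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Clamps a texture coordinate into [0, 1]; a simple stand-in for
   clamp-to-edge wrapping. */
static float clamp01(float t) {
    if (t < 0.0f) return 0.0f;
    if (t > 1.0f) return 1.0f;
    return t;
}

/* Hypothetical helper: returns the texel nearest to (u, v) in a raw,
   tightly packed RGBA8 image, packed as 0xRRGGBBAA. Row 0 is V = 0. */
static uint32_t sample_nearest(const uint8_t *rgba, int width, int height,
                               float u, float v) {
    assert(rgba != NULL && width > 0 && height > 0);
    int x = (int)(clamp01(u) * (float)width);   /* floor, since value >= 0 */
    int y = (int)(clamp01(v) * (float)height);
    if (x == width) x = width - 1;    /* u == 1.0 would land past the edge */
    if (y == height) y = height - 1;
    const uint8_t *p = rgba + ((size_t)y * (size_t)width + (size_t)x) * 4;
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}
```

For a 2x2 image, any UV inside the lower-left quarter of the unit square returns the first texel in memory.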

A reference to the texture

Once a texture has been exported, it can be sent to the GPU for processing. Texture images, like vertices, are sent to the GPU through OpenGL Objects. These objects are then processed by the Fragment Shader in the Per-Fragment stage of the pipeline.

OpenGL Objects have a very interesting property. Once an object is created and bound, any subsequent OpenGL operation on that kind of object affects only the bound object. If you were to create and bind object A, then create but not bind object B, the next OpenGL operation would apply only to object A. This property is important to keep in mind as we work with textures.

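Before we create and bind a texture object, we activate a Texture-Unit. A texture-unit is the section of the GPU responsible for Texturing Operations; the active unit determines which unit a subsequently bound texture is attached to (unit GL_TEXTURE0 is active by default).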

Once a texture-unit has been activated, an OpenGL Object can be created. OpenGL objects designed to work with textures are called Texture Objects. Like other OpenGL Objects, a Texture Object needs its behavior to be specified, which happens when it is first bound.
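In real OpenGL ES code, the sequence above is usually `glActiveTexture(GL_TEXTURE0)`, `glGenTextures`, `glBindTexture(GL_TEXTURE_2D, ...)`, and then calls such as `glTexImage2D` that act on the bound texture. Since those calls need a live GL context, the sketch below instead models the bind-to-edit rule in plain C. It is a toy, not the OpenGL API: every `toy_*` name is hypothetical, and the model ignores texture targets entirely.

```c
#include <assert.h>

#define TOY_MAX_UNITS    8
#define TOY_MAX_TEXTURES 16

/* Toy model of OpenGL's bind-to-edit state. This is NOT the OpenGL API:
   every name below is hypothetical and only mimics the rule that
   operations act on whatever object is bound to the active unit. */
typedef struct {
    int width, height;                     /* size of last uploaded image */
} ToyTexture;

typedef struct {
    ToyTexture textures[TOY_MAX_TEXTURES]; /* indexed by name; 0 unused */
    int texture_count;                     /* names handed out so far */
    int active_unit;                       /* plays the role of glActiveTexture */
    int bound[TOY_MAX_UNITS];              /* texture name per unit, 0 = none */
} ToyContext;

/* Like glGenTextures: creates an object but does NOT bind it. */
static int toy_gen_texture(ToyContext *ctx) {
    assert(ctx->texture_count < TOY_MAX_TEXTURES - 1);
    return ++ctx->texture_count;
}

/* Like glActiveTexture: selects which unit later binds attach to. */
static void toy_active_unit(ToyContext *ctx, int unit) {
    assert(unit >= 0 && unit < TOY_MAX_UNITS);
    ctx->active_unit = unit;
}

/* Like glBindTexture: attaches a texture to the active unit. */
static void toy_bind_texture(ToyContext *ctx, int name) {
    assert(name >= 0 && name <= ctx->texture_count);
    ctx->bound[ctx->active_unit] = name;
}

/* Like glTexImage2D: edits whichever texture is bound to the active unit,
   never one that was merely created. The toy treats name 0 as "nothing
   bound"; real OpenGL has a default texture object 0 instead. */
static void toy_upload_image(ToyContext *ctx, int width, int height) {
    int name = ctx->bound[ctx->active_unit];
    assert(name != 0);
    ctx->textures[name].width = width;
    ctx->textures[name].height = height;
}
```

Creating texture B after binding A leaves A as the target of the next upload, which is exactly the property described above.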

Texture Objects can behave either as objects that carry a two-dimensional image or as Cube Maps. A Cube Map is a collection of six 2D images that form the faces of a cube; cube maps are commonly used to provide the sky scenery in a game.
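When a Cube Map is sampled, the shader supplies a 3D direction rather than a UV pair, and one of the six faces is chosen to read from. The C sketch below shows only that face-selection step, assuming the usual major-axis rule; the enum and function names are hypothetical, and the computation of the 2D coordinate within the chosen face is omitted.

```c
#include <assert.h>

/* Face order follows OpenGL's cube map targets, from
   GL_TEXTURE_CUBE_MAP_POSITIVE_X through GL_TEXTURE_CUBE_MAP_NEGATIVE_Z. */
typedef enum {
    FACE_POS_X, FACE_NEG_X,
    FACE_POS_Y, FACE_NEG_Y,
    FACE_POS_Z, FACE_NEG_Z
} CubeFace;

static float abs_f(float a) { return a < 0.0f ? -a : a; }

/* Major-axis rule: the component with the largest magnitude picks the
   axis, and its sign picks the positive or negative face. Ties are
   broken in X, Y, Z order in this sketch. */
static CubeFace cube_face_for_direction(float x, float y, float z) {
    float ax = abs_f(x), ay = abs_f(y), az = abs_f(z);
    assert(ax > 0.0f || ay > 0.0f || az > 0.0f);  /* zero vector has no face */
    if (ax >= ay && ax >= az) return x > 0.0f ? FACE_POS_X : FACE_NEG_X;
    if (ay >= az)             return y > 0.0f ? FACE_POS_Y : FACE_NEG_Y;
    return z > 0.0f ? FACE_POS_Z : FACE_NEG_Z;
}
```

A camera looking straight up, direction (0, 1, 0), therefore reads from the +Y face, which is where a sky box would normally keep the sky.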

Images are normally exported in .png, .tga, or .jpeg formats. However, OpenGL does not accept these image file formats directly. Therefore, images must be decompressed into a raw format (an array of pixel values) before they can be loaded into a texture object. Unfortunately, the OpenGL API does not provide any decompression utility. Fortunately, various such utilities exist, and they can be integrated into your application very easily.
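Once a decoder has run, what remains is a plain array of pixel values. The sketch below assumes the decoder (whichever library you integrate) has produced a tightly packed RGBA8 buffer with the top row first, which is common. Because `glTexImage2D` treats the first row it receives as the bottom of the texture (V = 0), such a buffer is often flipped vertically before upload. The helper names `raw_rgba_size` and `flip_rows_rgba` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Size in bytes of a raw (decompressed) RGBA8 image: 4 bytes per texel. */
static size_t raw_rgba_size(int width, int height) {
    assert(width > 0 && height > 0);
    return (size_t)width * (size_t)height * 4;
}

/* Flips a raw RGBA8 image in place so its first row becomes its last.
   Returns 1 on success, 0 if the temporary row buffer cannot be allocated. */
static int flip_rows_rgba(uint8_t *pixels, int width, int height) {
    assert(pixels != NULL && width > 0 && height > 0);
    size_t row_bytes = (size_t)width * 4;
    uint8_t *tmp = malloc(row_bytes);
    if (tmp == NULL) return 0;
    for (int top = 0, bottom = height - 1; top < bottom; ++top, --bottom) {
        uint8_t *a = pixels + (size_t)top * row_bytes;
        uint8_t *b = pixels + (size_t)bottom * row_bytes;
        memcpy(tmp, a, row_bytes);   /* swap row `top` with row `bottom` */
        memcpy(a, b, row_bytes);
        memcpy(b, tmp, row_bytes);
    }
    free(tmp);
    return 1;
}
```

Some decoders can flip during loading instead; either way, the data handed to OpenGL is the raw array, not the .png or .jpeg file.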

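Once a Texture-Unit has been activated and a Texture Object has been created, bound, and had its behavior defined, Texture Parameters can be defined. Texture Parameters inform the GPU how to glue the texture to the geometry: for example, how to filter the image when it is magnified or minified, and how to treat texture coordinates that fall outside the 0 to 1 range.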
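Wrap modes are one such parameter, set in OpenGL with calls like `glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_REPEAT)`. To show what they mean, the CPU sketch below applies three wrap modes to a texel index before a nearest-neighbor lookup. The `WrapMode` enum and both functions are hypothetical; the enum values only mirror `GL_REPEAT`, `GL_MIRRORED_REPEAT`, and `GL_CLAMP_TO_EDGE`.

```c
#include <assert.h>

/* Hypothetical enum mirroring GL_REPEAT, GL_MIRRORED_REPEAT and
   GL_CLAMP_TO_EDGE. */
typedef enum { WRAP_REPEAT, WRAP_MIRRORED_REPEAT, WRAP_CLAMP_TO_EDGE } WrapMode;

/* Maps a texel index i (possibly outside 0..size-1) back into range. */
static int wrap_texel_index(int i, int size, WrapMode mode) {
    assert(size > 0);
    switch (mode) {
    case WRAP_REPEAT: {
        int m = i % size;
        return m < 0 ? m + size : m;          /* C's % can be negative */
    }
    case WRAP_MIRRORED_REPEAT: {
        int period = 2 * size;                /* forward pass + mirrored pass */
        int m = i % period;
        if (m < 0) m += period;
        return m < size ? m : period - 1 - m;
    }
    case WRAP_CLAMP_TO_EDGE:
    default:
        return i < 0 ? 0 : (i >= size ? size - 1 : i);
    }
}

/* Nearest texel for one texture coordinate, after wrapping. */
static int texel_index(float coord, int size, WrapMode mode) {
    float scaled = coord * (float)size;
    int i = (int)scaled;                      /* truncates toward zero */
    if ((float)i > scaled) --i;               /* make it floor for negatives */
    return wrap_texel_index(i, size, mode);
}
```

With a 4-texel-wide texture, a coordinate of 1.3 lands on texel 1 with repeat, texel 2 with mirrored repeat, and the last texel (3) with clamp-to-edge.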

Applying the texture

Textures are applied to a geometry, fragment by fragment, in the Fragment Shader. With a reference to the Texture-Unit, texture image and UV-Mapping coordinates, the fragment shader is able to attach the texture to the geometry as specified in the texture parameters.
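Filtering is the other parameter that shapes the final color: a fragment's UV rarely lands exactly on a texel center. As a CPU illustration only, the sketch below reproduces the idea behind `GL_LINEAR` (bilinear) filtering with clamp-to-edge wrapping on a single-channel float texture. The function names are hypothetical; on a device this blend is performed by the GPU when the Fragment Shader samples the texture.

```c
#include <assert.h>
#include <stddef.h>

/* Reads texel (x, y) with clamp-to-edge wrapping. Row 0 is V = 0. */
static float texel_clamped(const float *tex, int w, int h, int x, int y) {
    if (x < 0) x = 0;
    if (x >= w) x = w - 1;
    if (y < 0) y = 0;
    if (y >= h) y = h - 1;
    return tex[(size_t)y * (size_t)w + (size_t)x];
}

/* floor() for floats without pulling in <math.h>. */
static int floor_to_int(float f) {
    int i = (int)f;                 /* truncates toward zero */
    return ((float)i > f) ? i - 1 : i;
}

/* Bilinear (GL_LINEAR-style) sample of a single-channel float texture. */
static float sample_bilinear(const float *tex, int w, int h, float u, float v) {
    assert(tex != NULL && w > 0 && h > 0);
    /* Texel i covers [i/w, (i+1)/w) with its center at (i + 0.5)/w, so
       shifting by half a texel puts texel centers on integer positions. */
    float fx = u * (float)w - 0.5f;
    float fy = v * (float)h - 0.5f;
    int x0 = floor_to_int(fx);
    int y0 = floor_to_int(fy);
    float ax = fx - (float)x0;      /* horizontal blend weight in [0, 1) */
    float ay = fy - (float)y0;      /* vertical blend weight in [0, 1) */
    float row0 = (1.0f - ax) * texel_clamped(tex, w, h, x0, y0)
               + ax * texel_clamped(tex, w, h, x0 + 1, y0);
    float row1 = (1.0f - ax) * texel_clamped(tex, w, h, x0, y0 + 1)
               + ax * texel_clamped(tex, w, h, x0 + 1, y0 + 1);
    return (1.0f - ay) * row0 + ay * row1;
}
```

Sampling halfway between a black texel (0.0) and a white texel (1.0) yields 0.5, a gray that appears in neither texel; nearest filtering would return one of the two instead.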

Figure 5. A character with texture on a mobile device

Hope it helps.