Creating and Initializing Textures

A texture in OpenGL is an image that can be wrapped around a character. For example, the image below shows a character without a texture and one with a texture.

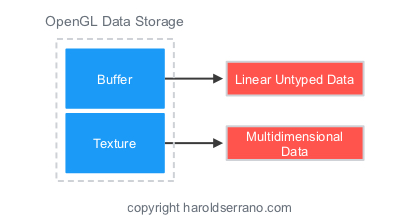

In OpenGL there are two types of data storage:

- Buffers

- Textures

Buffers are linear blocks of untyped data and can be seen as generic memory allocations. Textures are multidimensional data, such as images.

In OpenGL, attribute data such as:

- Vertex Position

- Normals

- UV coordinates

are stored in OpenGL Buffers. In contrast, image data is stored in OpenGL Texture Objects.

To store image data in a texture object you must:

- Create a Texture Object

- Allocate Texture Storage

- Bind the Texture Object

We use the following functions to create a texture object, allocate its storage, and bind it.

Listing 1

//create a new texture object

glCreateTextures(...);

//Allocate texture storage

glTextureStorage2D(...);

//Bind it to the GL_TEXTURE_2D target

glBindTexture(...);

Here is a snippet showing the steps required to create a texture object, allocate its storage, and bind it to the OpenGL context:

Listing 2

//The type used for names in OpenGL is GLuint

GLuint texture;

//Create a new 2D texture object

glCreateTextures(GL_TEXTURE_2D,1,&texture);

//Specify the amount of storage we want to use for the texture

glTextureStorage2D(texture, //Texture object

1, //1 mipmap level

GL_RGBA32F, //32 bit floating point RGBA data

256,256); //256 x 256 texels

//Bind it to the OpenGL Context using the GL_TEXTURE_2D binding point

glBindTexture(GL_TEXTURE_2D, texture);
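To get a feel for how much memory the call in Listing 2 asks for, here is a minimal sketch of the arithmetic. The function names `fullMipLevelCount` and `textureStorageBytes` are my own and are not part of OpenGL. The result is only an estimate of the raw texel data, because a driver is free to add padding or alignment on top of it.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>

// Number of levels in a full mipmap chain: halve the larger side until it reaches 1.
int fullMipLevelCount(int width, int height) {
    assert(width > 0 && height > 0);
    int levels = 1;
    int largest = std::max(width, height);
    while (largest > 1) {
        largest /= 2;
        ++levels;
    }
    return levels;
}

// Estimated bytes of raw texel data for the first `levels` mipmap levels.
// bytesPerTexel is 16 for GL_RGBA32F (4 channels x 4 bytes per channel).
std::size_t textureStorageBytes(int width, int height, int levels,
                                std::size_t bytesPerTexel) {
    assert(width > 0 && height > 0 && levels > 0);
    std::size_t total = 0;
    for (int level = 0; level < levels; ++level) {
        total += static_cast<std::size_t>(width) *
                 static_cast<std::size_t>(height) * bytesPerTexel;
        // Each following level has half the width and height, but never less than 1.
        width = std::max(1, width / 2);
        height = std::max(1, height / 2);
    }
    return total;
}
```

With the values from Listing 2, `textureStorageBytes(256, 256, 1, 16)` gives 1,048,576 bytes, i.e. 1 MiB of texel data for the single mipmap level.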

After your texture object is bound, you can load data into the texture. Data is loaded into a texture with the function:

Listing 3

//function to load texture data

glTexSubImage2D(...);

The following code snippet shows how you would load data into the texture object:

Listing 4

//Define some data to upload into the texture

float *data=new float[256*256*4];

glTexSubImage2D(GL_TEXTURE_2D, //Target the texture object is bound to

0, //level 0

0,0, //offset 0,0

256,256, //256 x 256 texels

GL_RGBA, //Four channel data

GL_FLOAT, //Floating point data

data); //Pointer to data
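Listing 4 allocates the array but does not say what goes into it. As a minimal sketch, the buffer could be filled with a checkerboard pattern so that the texture shows something recognizable. The helper `makeCheckerboard` and its `cellSize` parameter are hypothetical names of mine. The layout assumes four floats per texel, stored row by row, which matches the GL_RGBA and GL_FLOAT arguments in Listing 4.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Builds width x height RGBA texels in row-major order, four floats per texel.
// Cells of cellSize x cellSize texels alternate between white and black.
std::vector<float> makeCheckerboard(int width, int height, int cellSize) {
    assert(width > 0 && height > 0 && cellSize > 0);
    std::vector<float> texels(static_cast<std::size_t>(width) *
                              static_cast<std::size_t>(height) * 4);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const bool white = ((x / cellSize) + (y / cellSize)) % 2 == 0;
            const float value = white ? 1.0f : 0.0f;
            const std::size_t i =
                (static_cast<std::size_t>(y) * width + x) * 4;
            texels[i + 0] = value; // red
            texels[i + 1] = value; // green
            texels[i + 2] = value; // blue
            texels[i + 3] = 1.0f;  // alpha, fully opaque
        }
    }
    return texels;
}
```

If you build the data this way, `makeCheckerboard(256, 256, 32).data()` could take the place of the `data` pointer in Listing 4. A `std::vector` also frees its memory automatically, unlike the raw `new float[...]` allocation.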

Texture Targets and Types

One of the first problems that I had with OpenGL textures was understanding the use of Targets. It turns out that Targets are quite simple and straightforward. A texture Target determines the Type of the texture object.

For example, the texture we have created is a 2D texture. Thus, the texture object is bound to a 2D texture Target, GL_TEXTURE_2D. If you had a 1D texture, then you would bind the texture object to a 1D texture target, GL_TEXTURE_1D.

Since you will be using images, you will find yourself using GL_TEXTURE_2D quite a lot. This is because images are 2D representations of data.

Reading from Textures in Shaders

Once a texture object is bound and contains data, it is ready for a shader to use it. In shaders, textures are declared as uniform Sampler Variables.

For example:

Listing 5

uniform sampler2D myTexture;

The Sampler dimension corresponds to the texture dimensionality. Our texture is a 2D texture, thus it must be represented by a 2-dimensional sampler. The sampler type that represents 2-dimensional textures is sampler2D.

If your texture is 1D, the sampler to use is sampler1D. If the texture is 3D, the sampler to use is sampler3D.

Texture Coordinates

A sampler represents the texture and its sampling parameters, whereas texture coordinates identify a location on the texture and range from 0.0 to 1.0. These two pieces of information are what we need to apply a texture to an object. You already know how to represent a texture in a shader, i.e. using a sampler. But how do you get the texture coordinates in shaders?

Character data is sent to the GPU through OpenGL buffers. You load these buffers with data representing the attributes of your character. These attributes can be vertex positions, normals, or texture coordinates. Texture coordinates are also known as UV coordinates.
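To make the buffer contents concrete, here is a minimal sketch of one possible layout for these attributes on the CPU side. The `Vertex` struct, the constants, and `makeTexturedQuad` are hypothetical names of mine, and interleaving the attributes in a single struct is just one common choice, not something the rest of this post depends on.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One vertex with interleaved attributes: position, normal and UV coordinates.
struct Vertex {
    float position[3]; // x, y, z
    float normal[3];   // nx, ny, nz
    float uv[2];       // texture coordinates in the range 0.0 to 1.0
};

// The struct holds only floats, so it is expected to be tightly packed.
static_assert(sizeof(Vertex) == 8 * sizeof(float), "Vertex must be tightly packed");

// Distance in bytes between two consecutive vertices in the buffer.
constexpr std::size_t kVertexStride = sizeof(Vertex);

// Byte offset of the UV coordinates inside one vertex.
constexpr std::size_t kUvOffset = offsetof(Vertex, uv);

// Four corners of a quad facing +Z, with UVs covering the whole texture.
std::vector<Vertex> makeTexturedQuad() {
    return {
        {{-0.5f, -0.5f, 0.0f}, {0.0f, 0.0f, 1.0f}, {0.0f, 0.0f}},
        {{ 0.5f, -0.5f, 0.0f}, {0.0f, 0.0f, 1.0f}, {1.0f, 0.0f}},
        {{ 0.5f,  0.5f, 0.0f}, {0.0f, 0.0f, 1.0f}, {1.0f, 1.0f}},
        {{-0.5f,  0.5f, 0.0f}, {0.0f, 0.0f, 1.0f}, {0.0f, 1.0f}},
    };
}
```

The stride and offset are the kind of values you would hand to OpenGL when describing how a vertex attribute is laid out in a buffer.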

A Vertex Shader receives this information through variables known as Vertex Attributes. Only the Vertex Shader can receive Vertex Attribute data. The tessellation, geometry, and fragment shaders can't. If these shaders need this data, you must pass it down from the vertex shader.

Thus, the vertex shader receives the texture coordinates through vertex attribute variables. It then passes the coordinates down to the fragment shader. Once the coordinates are in the fragment shader, OpenGL can apply the texture to an object.

Listing 6 shows the texture coordinates declared as a vertex attribute. In the main function, the coordinates are passed to the fragment shader unmodified.

Listing 6

#version 450 core

//uniform variables

uniform mat4 mvMatrix;

uniform mat4 projMatrix;

//Vertex Attributes for position and UV coordinates

layout(location = 0) in vec4 position;

layout(location = 1) in vec2 texCoordinates;

//output to fragment shader

out TextureCoordinates_Out{

vec2 texCoordinates;

}textureCoordinates_out;

void main(){

//calculate the position of each vertex

vec4 position_vertex=mvMatrix*position;

//Pass the texture coordinate through unmodified

textureCoordinates_out.texCoordinates=texCoordinates;

gl_Position=projMatrix*position_vertex;

}

Listing 7 shows how the function texture() uses the sampler information and the texture coordinates to apply the image to a fragment.

Listing 7

#version 450 core

//Sampler2D declaration

layout(binding = 0) uniform sampler2D textureObject;

//Input from vertex shader (block and member names must match the vertex shader's output block)

in TextureCoordinates_Out{

vec2 texCoordinates;

}textureCoordinates_in;

//Output to framebuffer

out vec4 color;

void main(){

//Apply the texture with the given coordinates and sampler

color=texture(textureObject,textureCoordinates_in.texCoordinates);

}

Controlling How Texture Data Is Read

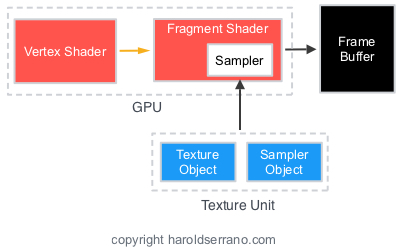

Samplers retrieve texture data found in Texture Units. A texture unit contains a Texture Object and a Sampler Object. You already know what a texture object is. Let's talk a bit about sampler objects.

Texture coordinates range between 0.0 and 1.0. OpenGL lets you decide what to do with coordinates that fall outside this range. This is called the Wrapping Mode. OpenGL also lets you decide what to do when pixels don't have a one-to-one ratio with the texels of a texture. This is called the Filtering Mode. A sampler object stores the wrapping and filtering parameters that control how a texture is read.
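To see what wrapping and filtering do to a coordinate, here is a minimal CPU-side sketch on a single row of texels. All function names are my own. The code is a simplified model of the GL_REPEAT, GL_CLAMP_TO_EDGE, GL_NEAREST and GL_LINEAR modes in one dimension, meant for building intuition, and it does not reproduce every detail of what the GPU does.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Simplified model of GL_REPEAT: keep only the fractional part of the coordinate.
float wrapRepeat(float u) {
    return u - std::floor(u);
}

// Simplified model of GL_CLAMP_TO_EDGE: clamp to the centers of the first and last texels.
float wrapClampToEdge(float u, int size) {
    assert(size > 0);
    const float halfTexel = 0.5f / static_cast<float>(size);
    return std::min(std::max(u, halfTexel), 1.0f - halfTexel);
}

// Keeps a texel index inside [0, size - 1].
std::size_t clampIndex(int index, int size) {
    return static_cast<std::size_t>(std::min(std::max(index, 0), size - 1));
}

// Simplified model of GL_NEAREST: pick the texel that contains u.
float sampleNearest(const std::vector<float>& texels, float u) {
    assert(!texels.empty());
    const int size = static_cast<int>(texels.size());
    const int index = static_cast<int>(std::floor(u * static_cast<float>(size)));
    return texels[clampIndex(index, size)];
}

// Simplified model of GL_LINEAR: blend the two texels whose centers surround u.
float sampleLinear(const std::vector<float>& texels, float u) {
    assert(!texels.empty());
    const int size = static_cast<int>(texels.size());
    // Texel centers sit at half-texel offsets, hence the -0.5.
    const float x = u * static_cast<float>(size) - 0.5f;
    const float x0 = std::floor(x);
    const float t = x - x0; // blend weight of the second texel
    const std::size_t i0 = clampIndex(static_cast<int>(x0), size);
    const std::size_t i1 = clampIndex(static_cast<int>(x0) + 1, size);
    return texels[i0] * (1.0f - t) + texels[i1] * t;
}
```

For example, `wrapRepeat(1.25f)` returns 0.25f, so a coordinate just past the right edge reads from the left part of the texture again. On a two-texel row holding 0.0 and 1.0, nearest filtering returns one of the two values, while linear filtering at the midpoint blends them to 0.5.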

A sampler needs both a texture object and a sampler object to be bound to a texture unit. Once both are in place, the sampler has all the information required to apply a texture.

In special circumstances, you would create a sampler object and bind it to a texture unit. But most of the time, you won't have to create a sampler object. This is because a texture object has a default sampler object which you can use. The default sampler object has default wrapping and filtering parameters.

To access the default sampler object stored inside a texture object, you can call:

Listing 8

//accessing the default sampler object in a texture object and setting the sampling parameters

glTexParameteri(...); //or glTexParameterf(...) for floating point parameters

Before you bind a texture object to a texture unit, you must activate a texture unit. This is accomplished by calling the following function, with the texture unit which you want to use:

Listing 9

//Activate Texture Unit 0

glActiveTexture(GL_TEXTURE0);
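The texture unit enums are consecutive, so the enum for unit N is GL_TEXTURE0 + N, and a sampler declared with layout(binding = N) reads from that same unit. Here is a minimal sketch of that mapping. The names `kGlTexture0` and `textureUnitEnum` are hypothetical, and I repeat the value of GL_TEXTURE0 locally only so the sketch compiles without the OpenGL headers. In a real program you would use the header's constant and query the number of available units with GL_MAX_COMBINED_TEXTURE_IMAGE_UNITS.

```cpp
#include <cassert>
#include <cstdint>

// Value of GL_TEXTURE0 in the OpenGL headers, repeated here so the sketch
// compiles on its own. Prefer the header constant in real code.
constexpr std::uint32_t kGlTexture0 = 0x84C0;

// Returns the enum to pass to glActiveTexture for a zero-based unit index.
// maxUnits stands in for the limit reported by the OpenGL implementation.
std::uint32_t textureUnitEnum(std::uint32_t unitIndex, std::uint32_t maxUnits) {
    assert(unitIndex < maxUnits);
    return kGlTexture0 + unitIndex;
}
```

With this mapping, unit index 0 corresponds to the GL_TEXTURE0 value used in Listing 9 and to the layout(binding = 0) sampler in Listing 7.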

I hope this post has been helpful. If you need me to clarify something, let me know. If you want to get your hands dirty with OpenGL Textures, do the project found in How to Apply Textures to a Game Character using OpenGL ES.
