Developing a Rendering Engine requires an understanding of how OpenGL and GPU shaders work. This article provides a brief overview of both. I will start by explaining the three main types of data that are sent to the GPU. Then I will give you a brief overview of shaders. Finally, I will show how shaders are used to create visual effects.

Sending Data to the GPU

To render a pixel on a screen, you need to communicate with the GPU. To do so, you need a medium. In this article, that medium is OpenGL.

OpenGL is not a programming language; it is an API whose purpose is to send data and rendering commands from the CPU to the GPU. Thus, as a computer graphics developer, your task is to send data to the GPU through OpenGL objects such as buffers and textures.

Once data reaches the GPU, it goes through the OpenGL Rendering Pipeline. It is through this pipeline that your game character is assembled.

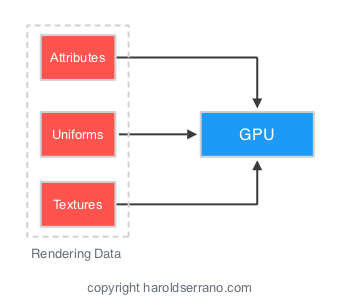

To do this, the GPU requires three sets of data:

- Attributes

- Uniforms

- Texture

Attributes

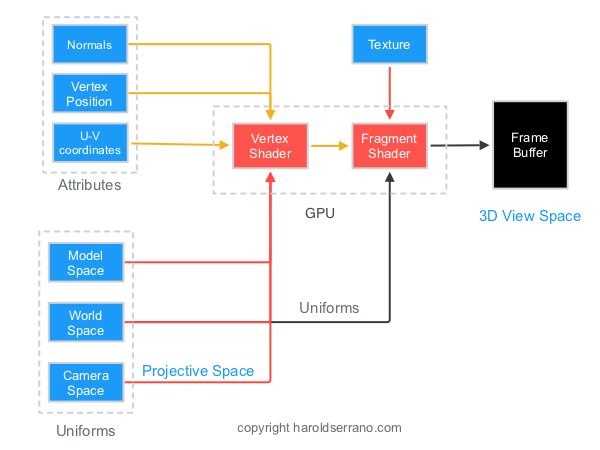

Attributes are per-vertex data. The GPU uses them to assemble a character's geometry and to apply lighting and images to it. The most common attributes are:

- Vertex positions

- Normal vectors

- U-V coordinates

Vertex positions are used by the GPU to assemble the character’s geometry. Normals are vectors perpendicular to a surface and are used to apply light to a character. U-V coordinates are used to map an image onto the character.
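To make the attribute list concrete, here is a minimal C++ sketch of how the three attributes might be interleaved in one CPU-side array before being copied into an OpenGL vertex buffer. The `Vertex` struct, `AttributeLayout`, and `makeTriangle` are hypothetical names chosen for illustration; the stride and offsets are the numbers a call such as `glVertexAttribPointer` needs.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One vertex with the three common attributes, stored interleaved.
struct Vertex {
    float position[3];  // x, y, z in model space
    float normal[3];    // unit vector perpendicular to the surface
    float uv[2];        // texture coordinates, usually in [0, 1]
};

// Byte layout an OpenGL call such as glVertexAttribPointer needs: the
// stride between consecutive vertices and where each attribute starts.
struct AttributeLayout {
    std::size_t stride;
    std::size_t positionOffset;
    std::size_t normalOffset;
    std::size_t uvOffset;
};

AttributeLayout vertexLayout() {
    return {sizeof(Vertex), offsetof(Vertex, position),
            offsetof(Vertex, normal), offsetof(Vertex, uv)};
}

// A single triangle facing the +z axis, ready to be copied into a buffer.
std::vector<Vertex> makeTriangle() {
    return {
        {{-0.5f, -0.5f, 0.0f}, {0.0f, 0.0f, 1.0f}, {0.0f, 0.0f}},
        {{0.5f, -0.5f, 0.0f}, {0.0f, 0.0f, 1.0f}, {1.0f, 0.0f}},
        {{0.0f, 0.5f, 0.0f}, {0.0f, 0.0f, 1.0f}, {0.5f, 1.0f}},
    };
}
```

Interleaving is only one option; each attribute can also live in its own buffer. With the buffer bound, the normal attribute could be described with something like `glVertexAttribPointer(1, 3, GL_FLOAT, GL_FALSE, layout.stride, reinterpret_cast<void*>(layout.normalOffset))`, where attribute index 1 is an assumption that must match the vertex shader.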

Uniforms

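Uniforms are values that stay the same for every vertex and fragment in a draw call. They commonly provide spatial data to the GPU in the form of transformation matrices. Normally, three sets of spatial data are needed to render a character on a screen. These are: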

- Model Space

- World Space

- Camera Space

These spaces inform the GPU where to position a game character relative to the screen.
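In most engines these spaces are expressed as 4x4 matrices sent as uniforms: a model matrix takes a vertex from model space to world space, and a view matrix takes it from world space to camera space. The sketch below assumes a hand-written column-major `Mat4` type (libraries such as GLM provide equivalents) and uses only translations to keep the arithmetic easy to follow; `identity` and `translation` are hypothetical helper names.

```cpp
#include <array>
#include <cassert>

struct Vec4 { float x, y, z, w; };

// Column-major 4x4 matrix (element (row, col) at m[col * 4 + row]), the
// layout glUniformMatrix4fv expects when its transpose flag is GL_FALSE.
struct Mat4 {
    std::array<float, 16> m{};  // zero-initialised
    float& at(int row, int col) {
        assert(row >= 0 && row < 4 && col >= 0 && col < 4);
        return m[col * 4 + row];
    }
    float at(int row, int col) const {
        assert(row >= 0 && row < 4 && col >= 0 && col < 4);
        return m[col * 4 + row];
    }
};

Mat4 identity() {
    Mat4 r;
    for (int i = 0; i < 4; ++i) r.at(i, i) = 1.0f;
    return r;
}

// Translation matrix: moves a point by (tx, ty, tz).
Mat4 translation(float tx, float ty, float tz) {
    Mat4 r = identity();
    r.at(0, 3) = tx;
    r.at(1, 3) = ty;
    r.at(2, 3) = tz;
    return r;
}

// Matrix product a * b: applying the result equals applying b, then a.
Mat4 operator*(const Mat4& a, const Mat4& b) {
    Mat4 r;
    for (int row = 0; row < 4; ++row)
        for (int col = 0; col < 4; ++col) {
            float sum = 0.0f;
            for (int k = 0; k < 4; ++k) sum += a.at(row, k) * b.at(k, col);
            r.at(row, col) = sum;
        }
    return r;
}

// Transforms a homogeneous point (w = 1) or direction (w = 0).
Vec4 operator*(const Mat4& a, const Vec4& v) {
    const float in[4] = {v.x, v.y, v.z, v.w};
    float out[4] = {0.0f, 0.0f, 0.0f, 0.0f};
    for (int row = 0; row < 4; ++row)
        for (int k = 0; k < 4; ++k) out[row] += a.at(row, k) * in[k];
    return {out[0], out[1], out[2], out[3]};
}
```

For example, a camera standing at z = 5 can be modeled by a view matrix that moves the world by -5 along z, so `translation(0, 0, -5) * translation(10, 0, 0)` sends the model-space point (1, 2, 3) to (11, 2, -2) in camera space. The combined matrix would typically be uploaded once per draw call, for example with `glUniformMatrix4fv(location, 1, GL_FALSE, matrix.m.data())`, where `location` is assumed to come from `glGetUniformLocation`.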

Texture

A texture is a 2-D image that is used to wrap a character.

Inside the GPU

The OpenGL rendering pipeline has four types of programmable shaders, known as:

- Vertex Shader

- Fragment Shader

- Tessellation Shader

- Geometry Shader

A shader is a program that runs on the GPU. Shaders are programmable and allow you to manipulate geometry and pixel colors.

Not all data is received by the GPU the same way. For example, attribute data can only be received by the Vertex Shader. Uniforms can be read by any shader, most commonly the Vertex and Fragment Shaders. Textures are normally read by the Fragment Shader.

Once data is in the GPU, it is processed by the OpenGL Rendering Pipeline.

The Rendering Pipeline processes the data through several stages, known as:

- Per-Vertex Operation

- Primitive Assembly

- Primitive Processing

- Rasterization

- Fragment Processing

- Per-Fragment Operation

Per-Vertex Operation

In the first stage, called Per-Vertex Operation, vertices are processed by the Vertex Shader.

Each vertex is transformed by a matrix, which moves it from one coordinate system into another, much like a photographic camera transforms a 3D scene into a 2D photograph.

Primitive Assembly

After the vertices of a primitive (three of them, in the case of a triangle) have been processed by the vertex shader, they are taken to the Primitive Assembly stage.

This is where a primitive is constructed by connecting the vertices in a specified order.
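The sketch below mimics how triangles might be assembled when vertices are drawn as `GL_TRIANGLES` through an index buffer: every three consecutive indices pick the vertices of one triangle, in order. `assembleTriangles` is a hypothetical CPU-side stand-in for work the GPU performs in fixed-function hardware.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Vec3 { float x, y, z; };
using Triangle = std::array<Vec3, 3>;

// Mimics GL_TRIANGLES assembly: every three consecutive indices select
// the vertices of one triangle, in the order they appear.
std::vector<Triangle> assembleTriangles(const std::vector<Vec3>& vertices,
                                        const std::vector<std::uint32_t>& indices) {
    assert(indices.size() % 3 == 0);
    std::vector<Triangle> triangles;
    for (std::size_t i = 0; i + 2 < indices.size(); i += 3) {
        triangles.push_back({vertices.at(indices[i]),
                             vertices.at(indices[i + 1]),
                             vertices.at(indices[i + 2])});
    }
    return triangles;
}
```

Indexing lets the two triangles of a quad share vertices 0 and 2 instead of storing them twice. On the GPU, the equivalent draw call would be `glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, nullptr)` with the index buffer bound.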

Primitive Processing

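Before the primitive is taken to the next stage, clipping occurs. Any primitive that falls entirely outside the View-Volume, i.e. the part of the scene visible on the screen, is discarded. A primitive that is only partly outside is clipped, so that only its visible part reaches the next stage.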
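In OpenGL, the vertex shader outputs positions in clip space, where the view volume is the region -w ≤ x, y, z ≤ w. The following sketch assumes that convention and shows the cheap classification done before clipping proper: triangles entirely inside are accepted, triangles entirely beyond one plane are discarded, and the rest are handed to a clipper (not shown) that cuts them along the boundary. `classifyTriangle` and `ClipResult` are hypothetical names.

```cpp
#include <array>
#include <cassert>

struct Vec4 { float x, y, z, w; };  // clip-space position from the vertex shader

// A clip-space point lies inside OpenGL's view volume when -w <= x, y, z <= w.
bool insideViewVolume(const Vec4& p) {
    return -p.w <= p.x && p.x <= p.w &&
           -p.w <= p.y && p.y <= p.w &&
           -p.w <= p.z && p.z <= p.w;
}

enum class ClipResult { Accept, Reject, NeedsClipping };

ClipResult classifyTriangle(const std::array<Vec4, 3>& tri) {
    // Trivially accept if all three vertices are inside.
    bool allInside = true;
    for (const Vec4& v : tri) allInside = allInside && insideViewVolume(v);
    if (allInside) return ClipResult::Accept;

    // Trivially reject if all three vertices lie beyond the same plane:
    // then no part of the triangle can be visible.
    auto allOutside = [&](auto outside) {
        for (const Vec4& v : tri)
            if (!outside(v)) return false;
        return true;
    };
    if (allOutside([](const Vec4& v) { return v.x < -v.w; }) ||
        allOutside([](const Vec4& v) { return v.x > v.w; }) ||
        allOutside([](const Vec4& v) { return v.y < -v.w; }) ||
        allOutside([](const Vec4& v) { return v.y > v.w; }) ||
        allOutside([](const Vec4& v) { return v.z < -v.w; }) ||
        allOutside([](const Vec4& v) { return v.z > v.w; }))
        return ClipResult::Reject;

    // Otherwise part of the triangle may be visible, so it goes to the clipper.
    return ClipResult::NeedsClipping;
}
```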

Rasterization

What you ultimately see on a screen are pixels approximating the shape of a primitive. This approximation occurs in the Rasterization stage. In this stage, pixels are tested to see if they are inside the primitive’s perimeter. If they are not, they are discarded.
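A common way to perform this inside test is with edge functions: a pixel is inside a counter-clockwise triangle when its center lies to the left of all three edges. This is a CPU sketch under that assumption, not a description of how any particular GPU is wired; the triangle is given in window (pixel) coordinates, and `edge`, `rasterize`, and `Fragment` are hypothetical names.

```cpp
#include <cassert>
#include <vector>

struct Vec2 { float x, y; };
struct Fragment { int x, y; };  // pixel coordinates of a covered pixel

// Edge function: positive when p lies to the left of the edge a -> b
// (for a counter-clockwise triangle, that is the inside).
float edge(const Vec2& a, const Vec2& b, const Vec2& p) {
    return (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x);
}

// Tests the center of every pixel in a width x height grid against a
// counter-clockwise triangle given in window (pixel) coordinates.
std::vector<Fragment> rasterize(const Vec2& v0, const Vec2& v1, const Vec2& v2,
                                int width, int height) {
    std::vector<Fragment> fragments;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const Vec2 center{x + 0.5f, y + 0.5f};
            const bool inside = edge(v0, v1, center) >= 0.0f &&
                                edge(v1, v2, center) >= 0.0f &&
                                edge(v2, v0, center) >= 0.0f;
            if (inside) fragments.push_back({x, y});  // passed: becomes a fragment
        }
    }
    return fragments;
}
```

For the triangle (0, 0), (4, 0), (0, 4) on a 4x4 grid, 10 pixel centers pass the test. Real rasterizers also apply a tie-breaking rule (commonly the "top-left" rule) to pixels lying exactly on an edge shared by two triangles, so they are not drawn twice; this sketch simply includes them.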

Pixels that lie within the primitive are taken to the next stage. Each pixel that passes the test produces a Fragment.

Fragment Processing

A Fragment is a candidate pixel that carries data, such as depth, color, and U-V coordinates, interpolated across the primitive. When fragments leave the rasterization stage, they are taken to the Fragment Processing stage, where they are received by the Fragment Shader. The responsibility of this shader is to apply a color or a texture to each fragment.

Per-Fragment Operation

Finally, fragments are subjected to several tests, such as:

- Pixel Ownership test

- Scissor test

- Alpha test

- Stencil test

- Depth test

At the end of the pipeline, the fragments that pass these tests are written as pixels to a Framebuffer; when drawing to the screen, this is the Default-Framebuffer. These are the pixels that you see on your mobile screen.
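The depth test from the list above can be sketched with a framebuffer that stores a depth value next to each color. The sketch assumes depth values in [0, 1], a depth buffer cleared to 1.0 (the default `glClearDepth` value), and the `GL_LESS` comparison, which is OpenGL's default depth function; the `Framebuffer` type itself is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// A framebuffer with a color buffer and a depth buffer of the same size.
struct Framebuffer {
    int width, height;
    std::vector<std::uint32_t> color;  // packed RGBA, one per pixel
    std::vector<float> depth;          // one per pixel, 0 = near, 1 = far

    Framebuffer(int w, int h)
        : width(w), height(h),
          color(static_cast<std::size_t>(w) * h, 0u),
          depth(static_cast<std::size_t>(w) * h, 1.0f) {}  // cleared like glClearDepth(1.0)

    // Depth test with GL_LESS semantics: the fragment is written only if it
    // is closer than whatever is already stored at that pixel.
    bool writeFragment(int x, int y, float z, std::uint32_t rgba) {
        assert(x >= 0 && x < width && y >= 0 && y < height);
        const std::size_t i = static_cast<std::size_t>(y) * width + x;
        if (!(z < depth[i])) return false;  // failed the depth test: discarded
        depth[i] = z;
        color[i] = rgba;
        return true;
    }
};
```

Because the nearer fragment wins regardless of drawing order, opaque objects can be submitted in any order and still occlude each other correctly.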

Basic Shader Operation

Shaders provide great flexibility in manipulating the image you see on a screen. At its simplest, a basic shader operation requires:

- Vertex Position (Attribute)

- Model-World-View Space (Uniforms)

The vertex shader receives the attribute data, transforms it with the Model-World-View space, and outputs the transformed position, which is then rasterized into fragments. The fragment shader then colors each fragment.
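To show how data flows through this basic operation, here are the two shader stages written as plain C++ functions. This is only an analogy: real shaders are written in GLSL and run on the GPU, and the `Uniforms` struct, `vertexShader`, and `fragmentShader` names are hypothetical. The vertex shader reads one attribute (the position) and one uniform (the Model-World-View matrix); the fragment shader returns a solid color taken from a uniform.

```cpp
#include <array>
#include <cassert>

struct Vec3 { float x, y, z; };
struct Vec4 { float x, y, z, w; };
using Mat4 = std::array<float, 16>;  // column-major: (row, col) at [col * 4 + row]

// Uniforms: identical for every vertex and fragment of one draw call.
struct Uniforms {
    Mat4 modelWorldView;  // Model-World-View matrix
    Vec4 color;           // solid color used by the fragment shader
};

// Vertex shader: one attribute in (the position), one transformed position out.
Vec4 vertexShader(const Uniforms& u, const Vec3& position) {
    const float in[4] = {position.x, position.y, position.z, 1.0f};
    float out[4] = {0.0f, 0.0f, 0.0f, 0.0f};
    for (int row = 0; row < 4; ++row)
        for (int col = 0; col < 4; ++col)
            out[row] += u.modelWorldView[col * 4 + row] * in[col];
    return {out[0], out[1], out[2], out[3]};
}

// Fragment shader: colors every fragment with the same uniform color.
Vec4 fragmentShader(const Uniforms& u) {
    return u.color;
}
```

In GLSL, the vertex shader would write its result to `gl_Position` and the fragment shader would write to an `out` color variable; the rasterizer sits between the two functions above.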

Manipulating 2D/3D Views with Uniforms

There are two ways to set up the projection space. It can be configured with an orthographic projection, thus producing a flat, 2D image on the framebuffer, as shown below:

Or it can be set up with a perspective projection, thus generating a 3D image on the framebuffer, as illustrated below:
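The two setups differ only in the matrix stored in the projection uniform. The sketch below builds the same matrices that the legacy `glOrtho` and `gluPerspective` functions produce, in column-major order, and then performs the divide by w that the GPU applies after clipping. The function and type names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Vec4 { float x, y, z, w; };
using Mat4 = std::array<float, 16>;  // column-major: (row, col) at [col * 4 + row]

void setElement(Mat4& m, int row, int col, float value) { m[col * 4 + row] = value; }

// Same matrix as glOrtho: maps the box [l,r] x [b,t] x [-n,-f] to [-1,1]^3.
// w stays 1, so distance does not change an object's size.
Mat4 orthographic(float l, float r, float b, float t, float n, float f) {
    Mat4 m{};
    setElement(m, 0, 0, 2.0f / (r - l));
    setElement(m, 1, 1, 2.0f / (t - b));
    setElement(m, 2, 2, -2.0f / (f - n));
    setElement(m, 0, 3, -(r + l) / (r - l));
    setElement(m, 1, 3, -(t + b) / (t - b));
    setElement(m, 2, 3, -(f + n) / (f - n));
    setElement(m, 3, 3, 1.0f);
    return m;
}

// Same matrix as gluPerspective: copies -z into w, so the later divide
// by w shrinks distant objects.
Mat4 perspective(float fovyRadians, float aspect, float n, float f) {
    const float g = 1.0f / std::tan(fovyRadians / 2.0f);
    Mat4 m{};
    setElement(m, 0, 0, g / aspect);
    setElement(m, 1, 1, g);
    setElement(m, 2, 2, (f + n) / (n - f));
    setElement(m, 2, 3, 2.0f * f * n / (n - f));
    setElement(m, 3, 2, -1.0f);  // w_clip = -z_eye
    return m;
}

// Applies the matrix, then the perspective divide, giving NDC coordinates.
Vec4 toNdc(const Mat4& m, const Vec4& p) {
    const float in[4] = {p.x, p.y, p.z, p.w};
    float out[4] = {0.0f, 0.0f, 0.0f, 0.0f};
    for (int row = 0; row < 4; ++row)
        for (int col = 0; col < 4; ++col) out[row] += m[col * 4 + row] * in[col];
    return {out[0] / out[3], out[1] / out[3], out[2] / out[3], 1.0f};
}
```

With the orthographic matrix, the camera-space points (1, 0, -2) and (1, 0, -4) land at the same x on screen. With the perspective matrix, the farther point lands at half the x of the nearer one, which is the foreshortening that makes the image look 3D.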

Applying textures to a game character

The image below shows a character with and without texture.

In order to map a texture onto an object, you need to provide U-V coordinates and a Sampler to the fragment shader. However, since U-V data is an attribute, it must go through the Vertex Shader first. The U-V coordinates are then passed down to the fragment shader.

A Texture is simply an image. In OpenGL, an image is sent to the GPU through a Texture Object. This Texture Object is bound to a Texture-Unit. The fragment shader references this texture-unit through a Sampler.

The fragment shader then uses the U-V coordinates along with the sampler data to properly map an image to the game character.
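The lookup itself can be sketched as follows: the U-V pair, assumed to lie in [0, 1], is scaled to texel coordinates and the nearest texel is returned. This corresponds to OpenGL's `GL_NEAREST` filter with `GL_CLAMP_TO_EDGE` wrapping; the `Texture` struct and `sampleNearest` function are hypothetical stand-ins for what a sampler does in hardware.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// A 2-D RGBA image stored row by row; row 0 corresponds to v near 0.
struct Texture {
    int width, height;
    std::vector<std::uint32_t> texels;  // packed RGBA
};

// Nearest-neighbor lookup with clamp-to-edge wrapping.
std::uint32_t sampleNearest(const Texture& tex, float u, float v) {
    assert(tex.width > 0 && tex.height > 0);
    assert(tex.texels.size() == static_cast<std::size_t>(tex.width) * tex.height);
    const int x = std::clamp(static_cast<int>(std::floor(u * tex.width)), 0, tex.width - 1);
    const int y = std::clamp(static_cast<int>(std::floor(v * tex.height)), 0, tex.height - 1);
    return tex.texels[static_cast<std::size_t>(y) * tex.width + x];
}
```

GPUs more commonly use `GL_LINEAR`, which blends the nearest texels, often combined with mipmapping; both are configured on the texture object with `glTexParameteri`.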

Applying Light to a game character

Light is everything. Without it, we could not see the world around us. In a similar fashion, without the simulation of light in computer graphics, we could not see the characters in a game.

To simulate light, a GPU requires the vertex positions and the normals of a character. Normals are vectors perpendicular to a surface and are used to apply lighting effects to the character. Normals are attributes and are passed down to the GPU through OpenGL buffers.
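When a model does not come with normals, a common way to obtain one for a flat triangle is the normalized cross product of two of its edges. The sketch assumes counter-clockwise vertex order, which makes the normal point toward a viewer facing the front side; smooth shading usually averages such face normals per vertex, which is not shown here. The helper names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 sub(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }

Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a.y * b.z - a.z * b.y,
            a.z * b.x - a.x * b.z,
            a.x * b.y - a.y * b.x};
}

Vec3 normalize(const Vec3& v) {
    const float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    assert(len > 0.0f);  // degenerate triangles have no normal
    return {v.x / len, v.y / len, v.z / len};
}

// Unit normal of a counter-clockwise triangle: perpendicular to both edges.
Vec3 faceNormal(const Vec3& p0, const Vec3& p1, const Vec3& p2) {
    return normalize(cross(sub(p1, p0), sub(p2, p0)));
}
```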

Light is simulated in OpenGL shaders, in either the Vertex or the Fragment Shader. However, I recommend simulating light in the fragment shader, since computing it per fragment, rather than per vertex and then interpolating the result, produces a more realistic effect.

Understanding Light in Computer Graphics

Your task as a computer graphics developer is to simulate four different categories of light:

- Emissive Light

- Ambient Light

- Diffuse Light

- Specular Light

Emissive Light

Emissive light is the light produced by the surface itself, in the absence of a light source. It is simply the color and intensity with which an object glows.

Ambient Light

Ambient light is the light that enters a room and bounces multiple times around the room before lighting a particular object. The ambient light contribution depends on the light’s ambient color and the material’s ambient color.

The light’s ambient color represents the color and intensity of the light. The material’s ambient color represents how much of that ambient light the surface reflects.
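Emissive and ambient light are the simplest terms to compute, because neither depends on the light direction. The sketch assumes RGB colors in [0, 1] and models the ambient term as the component-wise product of the light's ambient color and the material's ambient color, with the emissive color added on top; `Color` and the helper names are hypothetical.

```cpp
#include <cassert>

struct Color { float r, g, b; };

// Component-wise product: each channel of the light scales the same
// channel of the material.
Color modulate(const Color& a, const Color& b) {
    return {a.r * b.r, a.g * b.g, a.b * b.b};
}

Color add(const Color& a, const Color& b) {
    return {a.r + b.r, a.g + b.g, a.b + b.b};
}

// Emissive: the surface's own glow, independent of any light.
// Ambient: the light's ambient color times the material's ambient color.
Color emissivePlusAmbient(const Color& materialEmissive,
                          const Color& lightAmbient,
                          const Color& materialAmbient) {
    return add(materialEmissive, modulate(lightAmbient, materialAmbient));
}
```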

Diffuse Light

Diffuse light represents direct light hitting a surface. The diffuse light contribution depends on the incident angle. For example, light hitting a surface at a 90 degree angle contributes more than light hitting the same surface at a 5 degree angle.

Diffuse light is dependent on the material colors, light colors, illuminance, light direction and normal vector.
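The dependence on the incident angle is usually modeled with Lambert's cosine law: the contribution is proportional to the dot product of the unit surface normal and the unit direction toward the light, clamped at zero. Below is a sketch of that model for one color channel; the names are hypothetical, and in a shader this would be written in GLSL with its built-in `dot` and `max`.

```cpp
#include <algorithm>
#include <cassert>

struct Vec3 { float x, y, z; };

float dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Lambertian diffuse factor. n: unit surface normal; toLight: unit vector
// from the surface toward the light. It equals the cosine of the angle
// between them: light arriving along the normal (90 degrees to the surface)
// gives 1, grazing light gives almost 0, and light from behind is clamped to 0.
float diffuseFactor(const Vec3& n, const Vec3& toLight) {
    return std::max(dot(n, toLight), 0.0f);
}

// Diffuse contribution for one color channel:
// lightDiffuse * materialDiffuse * max(n . l, 0).
float diffuseChannel(float lightDiffuse, float materialDiffuse,
                     const Vec3& n, const Vec3& toLight) {
    return lightDiffuse * materialDiffuse * diffuseFactor(n, toLight);
}
```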

In practice, diffuse light would produce this type of effect:

Specular Light

Specular light is the bright highlight seen on smooth, shiny objects. Specular light depends on the direction of the light, the surface normal, and the viewer's location.
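A classic way to model this is the Phong specular term: reflect the light direction about the normal, then raise the cosine between the reflected ray and the view direction to a shininess exponent. The sketch assumes unit-length vectors pointing away from the surface; `reflect` follows the same formula as GLSL's built-in `reflect`, and the other names are hypothetical. Many engines use the Blinn-Phong variant instead, which replaces the reflected ray with a half vector.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

float dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 scale(const Vec3& v, float s) { return {v.x * s, v.y * s, v.z * s}; }
Vec3 sub(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }

// Mirror reflection of the incident direction i about the unit normal n:
// i - 2 * dot(n, i) * n, the same formula GLSL's reflect() uses.
Vec3 reflect(const Vec3& i, const Vec3& n) { return sub(i, scale(n, 2.0f * dot(n, i))); }

// Phong specular factor. n: surface normal; toLight: surface to light;
// toViewer: surface to camera. The highlight is brightest when the viewer
// sits on the reflected ray; a larger shininess gives a tighter highlight.
float specularFactor(const Vec3& n, const Vec3& toLight, const Vec3& toViewer,
                     float shininess) {
    if (dot(n, toLight) <= 0.0f) return 0.0f;          // light behind the surface
    const Vec3 r = reflect(scale(toLight, -1.0f), n);  // reflected light ray
    return std::pow(std::max(dot(r, toViewer), 0.0f), shininess);
}
```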

In practice, specular light would produce this type of effect:

By simulating these Light types in a shader, you can produce the following effect:

Conclusion

With this knowledge, you can create your own Rendering Engine. A rendering engine consists of a Rendering Manager, which is in charge of sending data to the GPU and activating the correct shaders. Doing so allows you to have C++ classes that take care of rendering 3D objects, 2D objects, and cube maps.
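As an illustration of that architecture, here is a hypothetical `RenderManager` that keeps one shader per kind of object and switches shaders only when needed. The class, its methods, the object kinds, and the shader names are all assumptions made for this sketch; instead of calling OpenGL (for example `glUseProgram` and `glDrawElements`), it records each step so the control flow can be checked.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical object kinds the engine knows how to draw.
enum class RenderKind { Mesh3D, Sprite2D, Skybox };

struct RenderObject {
    std::string name;
    RenderKind kind;
};

// Hypothetical rendering manager: activating a shader and drawing an object
// are only recorded as strings here, not sent to the GPU.
class RenderManager {
public:
    void registerShader(RenderKind kind, std::string shaderName) {
        shaders_[kind] = std::move(shaderName);
    }

    void submit(RenderObject object) { queue_.push_back(std::move(object)); }

    // Draws every queued object with the shader registered for its kind,
    // switching shaders only when the required shader changes.
    std::vector<std::string> renderFrame() {
        std::vector<std::string> steps;
        const std::string* active = nullptr;
        for (const RenderObject& obj : queue_) {
            const std::string& shader = shaders_.at(obj.kind);
            if (active == nullptr || *active != shader) {
                steps.push_back("use " + shader);
                active = &shader;
            }
            steps.push_back("draw " + obj.name);
        }
        queue_.clear();
        return steps;
    }

private:
    std::map<RenderKind, std::string> shaders_;
    std::vector<RenderObject> queue_;
};
```

Grouping objects by shader before submitting them, as in the test scenario of two 3D meshes followed by a 2D sprite, keeps the number of shader switches low, which is one common reason to centralize rendering in a manager.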

I recommend watching the video below before you develop your Rendering Engine.

I hope this article helped.
